
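Inside the class, the text is tokenized with `self.words = nltk.word_tokenize(self.text)`, a contraction-handling method is documented as `"""Replace contractions in string of text"""`, HTML is parsed with `soup = BeautifulSoup(self.text, "html.parser")`, and the next method begins with `def remove_between_square_brackets(self):`.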
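The class fragments above use BeautifulSoup to strip HTML and a `remove_between_square_brackets` method to drop bracketed spans. Below is a minimal sketch that needs no third-party packages. It assumes the standard library's `html.parser.HTMLParser` as a stand-in for BeautifulSoup, so it is not the original code, and `_TextExtractor` and `strip_html` are hypothetical names.

```python
import re
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects only the text content of an HTML document, dropping all tags."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_html(text):
    """Return the visible text of `text` (stand-in for BeautifulSoup's get_text())."""
    parser = _TextExtractor()
    parser.feed(text)
    parser.close()
    # Join text runs with spaces, then collapse repeated whitespace.
    return " ".join(" ".join(parser.parts).split())


def remove_between_square_brackets(text):
    """Delete every [...] span, e.g. citation markers like [1] or [citation needed]."""
    return re.sub(r"\[[^\]]*\]", "", text)


sample = "<h1>Title Goes Here</h1><p>Don't do it. [citation needed]</p>"
cleaned = remove_between_square_brackets(strip_html(sample)).strip()
print(cleaned)  # Title Goes Here Don't do it.
```

Unlike BeautifulSoup, this parser does not skip `<script>` or `<style>` contents, so it only suits simple markup.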

NLTK tutorials: clean text data (full)

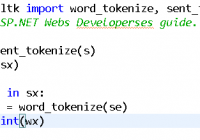

So my full definition of the class looks like this (the example and many of the functions are from KDnuggets). It begins with the imports `import re, string, unicodedata`, `from nltk import word_tokenize, sent_tokenize` and `from nltk.stem import LancasterStemmer, WordNetLemmatizer`.

> “Sample text, then strip HTML, then remove between square brackets, then remove numbers.”

Full implementation
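The imports include `unicodedata`, which is commonly used to fold accented characters to plain ASCII, for example the Spanish names in the sample text. The sketch below shows one way to do that; the function name `remove_non_ascii` is an assumption. It also silently drops characters that have no ASCII form, such as `¡` or any non-Latin script, so it is only appropriate when that loss is acceptable.

```python
import unicodedata


def remove_non_ascii(text):
    """Fold accented letters to their ASCII base and drop anything else non-ASCII.

    NFKD splits 'á' into 'a' plus a combining accent; encoding to ASCII with
    errors='ignore' then discards the accent (and characters like '¡').
    """
    normalized = unicodedata.normalize("NFKD", text)
    return normalized.encode("ascii", "ignore").decode("ascii")


print(remove_non_ascii("¡Sebastián, Nicolás, Alejandro and Jéronimo!"))
# Sebastian, Nicolas, Alejandro and Jeronimo!
```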

NLTK tutorials: clean text data (code)

We can read our code out loud, too: just read each dot as "then". This makes the code readable and easy to modify. The sample text also contains sentences such as "I have to go get 2 tutus from 2 different stores, too.", "There are a lot of reasons not to do this." and "This is a great little house you've got here."
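A minimal sketch of the "read the dot" style, assuming each cleaning step is a method that updates `self.text` and returns `self` so the calls can be chained. `TextCleaner`, `remove_numbers` and `normalize_whitespace` are hypothetical names, and the regex-based `strip_html` here is a deliberately crude stand-in for BeautifulSoup.

```python
import re


class TextCleaner:
    """Hypothetical chainable cleaner: every step returns self, so calls read left to right."""

    def __init__(self, text):
        self.text = text

    def strip_html(self):
        # Crude tag removal with a regex; a real HTML parser is more robust.
        self.text = re.sub(r"<[^>]+>", " ", self.text)
        return self

    def remove_between_square_brackets(self):
        self.text = re.sub(r"\[[^\]]*\]", "", self.text)
        return self

    def remove_numbers(self):
        self.text = re.sub(r"\d+", "", self.text)
        return self

    def normalize_whitespace(self):
        # Collapse runs of spaces left behind by the earlier steps.
        self.text = " ".join(self.text.split())
        return self


cleaned = (
    TextCleaner("<p>I have to go get 2 tutus from 2 different stores, too. [1]</p>")
    .strip_html()
    .remove_between_square_brackets()
    .remove_numbers()
    .normalize_whitespace()
    .text
)
print(cleaned)  # I have to go get tutus from different stores, too.
```

Because every method returns `self`, changing the order of the pipeline is just a matter of moving a line.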

NLTK tutorials: clean text data (movie)

For example, different choices of stopword removal, stemming and lemmatization can have a large impact on the accuracy of your models. Often, the order in which you do the cleaning is also critical. Do you want to remove certain words first and then tokenize the text? Or tokenize first and then remove the tokens? What we need is code that is clear to understand and yet flexible enough to do the pre-processing job.

When using R, the pipe operator `%>%` takes care of most of this. However, there is no really good equivalent in Python because of the natural differences between Python and R (long but very good read).

In other words, it's like saying that when OOP was born, it was also born with the Gang-of-Four design patterns baked into its core as its backing theory of thought (outside of types, inheritance, methods, etc.), that every implemented OOP language included these patterns and abstractions by default for you to take advantage of, and that these patterns were bullet-proofed by centuries of research. But that already cannot be correct: the Singleton pattern is by now widely recognized as an anti-pattern, and the GoF authors said they would remove it if only they could go back in time.

But we can definitely hack our way around this using Python class design.

Let's create a snippet of text as an example. It starts with `sample = """Title Goes Here` and includes lines such as:

- ¡Sebastián, Nicolás, Alejandro and Jéronimo are going to the store tomorrow morning!
- Why couldn't you have dinner at the restaurant?
- Don't do it.
- My favorite movie franchises, in order: Indiana Jones, Marvel Cinematic Universe, Star Wars, Back to the Future, Harry Potter.
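To make the points about R's `%>%` and cleaning order concrete: Python has no built-in pipe operator, but a small helper built on `functools.reduce` gives a similar left-to-right pipeline. This is a sketch with a hypothetical `pipe` helper and a tiny hand-picked stopword set standing in for NLTK's English list. Removing stopwords before lowercasing lets the capitalized "The" slip through.

```python
from functools import reduce

# Assumption: a tiny hand-picked stopword set instead of NLTK's English list.
STOPWORDS = {"the", "to", "a", "do", "it", "not"}


def pipe(value, *funcs):
    """Apply funcs left to right, roughly like R's value %>% f %>% g."""
    return reduce(lambda acc, f: f(acc), funcs, value)


def tokenize(text):
    return text.split()


def lowercase(tokens):
    return [t.lower() for t in tokens]


def remove_stopwords(tokens):
    return [t for t in tokens if t not in STOPWORDS]


text = "The store is close to the restaurant"
print(pipe(text, tokenize, lowercase, remove_stopwords))
# ['store', 'is', 'close', 'restaurant']
print(pipe(text, tokenize, remove_stopwords, lowercase))
# ['the', 'store', 'is', 'close', 'restaurant']
```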

When building NLP models, pre-processing your data is extremely important. Well, I think it all starts with one of my favorite tweets from 2013:

> In Data Science, 80% of time spent prepare data, 20% of time spent complain about need for prepare data. (Big Data Borat, February 27, 2013)

Using a list, your approach is O(n*m), where n is the number of words in the text and m is the number of stopwords; using a set, the approach is O(n + m). Let's compare both approaches, list vs. set, with `import timeit`. The stopwords are stored as `set_stop_words = set(stopwords.words('english'))`, and the two functions are timed with `print(timeit.timeit('list_clean(text)', 'from __main__ import text, list_clean', number=5))` and `print(timeit.timeit('set_clean(text)', 'from __main__ import text, set_clean', number=5))`.

Here `list_clean` is a function that removes stopwords using a list and `set_clean` is a function that removes stopwords using a set. The first time printed corresponds to `list_clean` and the second to `set_clean`. For the given example, `set_clean` is almost 10 times faster.

O(n*m) and O(n + m) are examples of big O notation, a theoretical way of measuring the efficiency of algorithms. Basically, the faster the expression grows with the input size, the less efficient the algorithm; in this case O(n*m) grows faster than O(n + m), so the `list_clean` method is theoretically less efficient than the `set_clean` method. These numbers come from the fact that searching a list takes time linear in its length, while a set lookup takes a constant amount of time on average, often referred to as O(1). Checking each of the n words against a list of m stopwords therefore costs O(n*m), whereas building the set once costs O(m) and the n lookups cost O(n).
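A self-contained version of the list vs. set comparison. It assumes a short hand-written stopword list in place of `stopwords.words('english')`, so no NLTK download is needed, and a synthetic `text`. It passes `globals=globals()` to `timeit.timeit` instead of the `from __main__ import ...` setup string; both work when the file is run as a script. The absolute timings and the size of the speedup depend on the machine and on how many stopwords there are, so no particular ratio is claimed here.

```python
import timeit

# Assumption: a small hand-written stopword list in place of
# nltk.corpus.stopwords.words('english'), so the sketch has no downloads.
stop_words = ["i", "me", "my", "we", "our", "you", "he", "she", "it", "they",
              "a", "an", "the", "and", "or", "but", "if", "of", "at", "by",
              "for", "with", "to", "from", "in", "on", "is", "are", "was", "were"]
set_stop_words = set(stop_words)  # built once: O(m)

# Synthetic input: n is roughly 14,000 words.
text = "the quick brown fox jumps over the lazy dog and runs to the forest " * 1000


def list_clean(text):
    # Each `in` check scans the list: O(m) per word, O(n*m) overall.
    return [w for w in text.split() if w not in stop_words]


def set_clean(text):
    # Each `in` check is an average O(1) hash lookup: O(n) overall.
    return [w for w in text.split() if w not in set_stop_words]


print(timeit.timeit("list_clean(text)", globals=globals(), number=5))
print(timeit.timeit("set_clean(text)", globals=globals(), number=5))
```

Both functions return the same tokens; only the cost of the membership test differs.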
